In a previous post, I described how Dartfish can be used to scan and tag the interesting sections of long videos and assemble those juicy bits into a much shorter, more focused video clip. In this example, I do exactly the opposite. I show how Dartfish can be used to “disassemble” a complex, multi-layered operational video into a sequence of individual actions that can be viewed and studied with much more clarity.

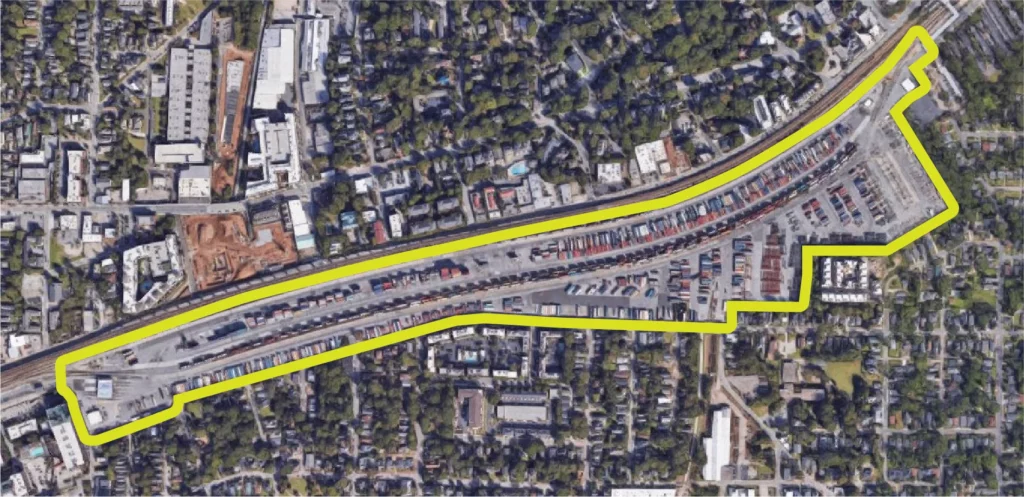

The example starts with a video of trucks trying to drop off and pick up intermodal containers (on trailer chassis) in the CSX Hulsey Intermodal yard in downtown Atlanta. The yard is not that old – just a decade or two. However, space is constrained by its location along a historic rail route in a major urban downtown. I recorded the video about 3 years ago for my supply chain class at Auburn University, shooting from the roof of my loft. I didn’t really need CSX permission to video-record from my home, but I asked … and they were nice enough to agree. You can see the yard location on this map:

The video below was shot from a roof at the west end of the yard … looking east. The confined parking space means that trucks have to pick up and drop off their containers in a complicated setting, with lots of congestion. You don’t have to watch very much of this clip to see how conflicted and seemingly chaotic the action appears.

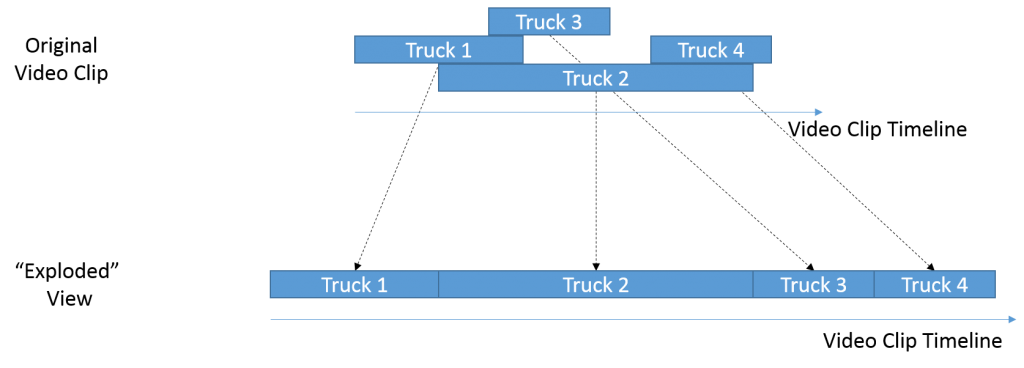

My immediate reaction when I reviewed this video clip was to wonder how anyone could make sense of this seeming “circus”. Everything seems to be happening at once. My first step was to break the video down into discrete durations for each vehicle and study them individually. Fortunately, Dartfish makes this easy. You can apply ‘continuous duration’ tags to specific segments of the video … and these durations can overlap! In addition, the Dartfish Analyzer makes it easy to add labels that appear briefly at the start of each tagged duration to highlight which truck is the object of interest for that segment.

Once the actions of each truck were tagged and labeled, I used another Dartfish feature to export the previously overlapping, tagged durations as separate clips and arrange them back to back on a new ‘storyline’.

When I published this storyline, the result was the clip that you can view below. It is longer than the original, but that is because it breaks out the actions of each truck and displays them … one after the other. So you can see what the first truck does, then the video effectively rewinds so you can follow how the second truck behaves … and so on.
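
For readers who think in code, here is a minimal Python sketch of why the storyline runs longer than the source video. It is not Dartfish's API or file format, just an illustration of the idea: overlapping tagged durations are laid end to end, so the total is the sum of their lengths rather than the span they cover. The `TaggedDuration` class, the `build_storyline` function, and the timings are all hypothetical values I made up for the example, not measurements from the Hulsey yard video.

```python
from dataclasses import dataclass


@dataclass
class TaggedDuration:
    """One 'continuous duration' tag (hypothetical structure, not Dartfish's)."""
    label: str    # which truck this segment follows
    start: float  # seconds into the original video
    end: float    # seconds into the original video

    @property
    def length(self) -> float:
        return self.end - self.start


def build_storyline(tags):
    """Lay tagged durations back to back, ordered by their start time.

    Returns a list of (label, source_start, source_end, storyline_start)
    tuples and the total storyline length in seconds.
    """
    storyline = []
    cursor = 0.0
    for tag in sorted(tags, key=lambda t: t.start):
        storyline.append((tag.label, tag.start, tag.end, cursor))
        cursor += tag.length
    return storyline, cursor


# Made-up timings: three trucks whose actions overlap in the source video.
tags = [
    TaggedDuration("Truck 1", 0.0, 90.0),
    TaggedDuration("Truck 2", 30.0, 150.0),
    TaggedDuration("Truck 3", 100.0, 180.0),
]

storyline, total_length = build_storyline(tags)
original_length = max(t.end for t in tags) - min(t.start for t in tags)

for label, src_start, src_end, out_start in storyline:
    print(f"{label}: source {src_start:.0f}-{src_end:.0f}s, "
          f"storyline starts at {out_start:.0f}s")
print(f"original span: {original_length:.0f}s, storyline: {total_length:.0f}s")
```

With these made-up numbers, the three tags cover a 180-second span of the source but add up to 290 seconds once they are played one after the other. Each new segment jumps back to an earlier point in the source, which is the “rewind” effect.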

As I indicated at the start, this is the opposite of the process where I produced compressed videos with just a few selected good bits. Here, I have decompressed a seemingly chaotic scene into a series of individual, focused scenes. Someone can now watch the parking behavior for truck after truck … and hopefully start to garner some insights.

Finally, now that the actions are broken out and individualized, I can develop a new tagging protocol at a much finer level of detail (forward/reverse, wait for traffic, turn around … etc.) and apply it easily to this longer “deconstructed” video clip. That could generate detailed data on truck movements, delays, etc.

But that is material for another post.